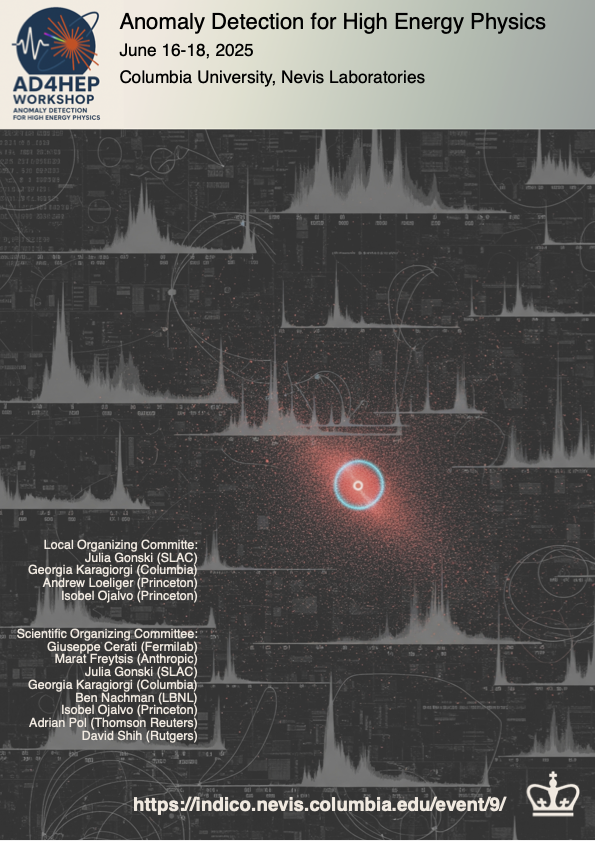

Anomaly Detection for High Energy Physics (AD4HEP) Workshop

Nevis Science Center

Columbia University, Nevis Laboratories

The AD4HEP Workshop brings together people working on or interested in experimental, theoretical, and phenomenological aspects of anomaly detection in high energy physics or closely related fields.

The workshop includes invited plenary talks and plenary early career-contributed lightning talks, as well as useful hands-on tutorials for anomaly detection applications.

The workshop aims to connect and build a community of scientists spanning experiment, theory and industry who are interested in a new paradigm for discoveries in particle physics and beyond, and to catalyze collaboration on new frameworks and tools for model-agnostic searches for new physics.

Scientific Organizing Committee:

Giuseppe Cerati, Fermi National Accelerator Laboratory

Marat Freytsis, Anthropic

Julia Gonski, SLAC National Accelerator Laboratory

Georgia Karagiorgi, Columbia University

Ben Nachman, Lawrence Berkeley National Laboratory

Isobel Ojalvo, Princeton University

Adrian Pol, Thomson Reuters

David Shih, Rutgers University

Local Organizing Committee:

Julia Gonski, SLAC National Accelerator Laboratory

Georgia Karagiorgi, Columbia University

Andrew Loeliger, Princeton University

Isobel Ojalvo, Princeton University

Jon Sensenig, Columbia University

-

-

Opening Remarks

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Georgia Karagiorgi (Columbia University)

Physics Results with AD

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Julia Gonski (SLAC National Accelerator Laboratory)

1

Machine Learning-Driven Anomaly Detection in Dijet Events with ATLAS

This contribution discusses an anomaly detection search for narrow-width resonances beyond the Standard Model that decay into a pair of jets. Using 139 fb^-1 of proton-proton collision data at sqrt(s) = 13 TeV, recorded from 2015 to 2018 with the ATLAS detector at the Large Hadron Collider, we aim to identify new physics without relying on a specific signal model. The analysis employs two machine learning strategies to estimate the background in different signal regions, with weakly supervised classifiers trained to differentiate this background estimate from actual data. We focus on high transverse momentum jets reconstructed as large-radius jets, using their mass and substructure as classifier inputs. After a classifier-based selection, we analyze the invariant mass distribution of the jet pairs for potential local excesses. Our model-independent results indicate no significant local excesses, and we inject a representative set of signal models into the data to evaluate the sensitivity of our methods. This contribution discusses the methods used and the latest results, and highlights the potential of machine learning in enhancing the search for new physics in fundamental particle interactions.

Speaker: Dennis Noll

-

2

-

3

Compare/contrast ATLAS YXH and SVJ searches

Speaker: Gabriel Matos (Columbia University)

-

4

Anomaly detection in Jet+X with autoencoder at ATLAS and Deployment of the ADFilter Tool

This work presents a strategy for model-independent searches for new physics using deep unsupervised learning techniques at the LHC. The first study applies a deep autoencoder to 140 fb^-1 of sqrt(s) = 13 TeV pp collision data recorded by the ATLAS detector, selecting outlier events that deviate from the dominant Standard Model kinematics. Invariant mass distributions of various two-body systems - such as jet+jet, jet+lepton, and b-jet+photon - are analyzed in these anomalous regions to search for resonant structures, setting 95% CL upper limits on BSM cross sections. Complementing this, a second study introduces ADFilter, a public web-based tool enabling theorists and experimentalists to evaluate BSM model sensitivity using trained autoencoders. ADFilter processes event-level data through a standardized pipeline - including rapidity-mass matrix construction, anomaly scoring, and cross-section evaluation - allowing rapid estimation of anomaly region acceptances and facilitating reinterpretation of published ATLAS results.

Speaker: Edison Weik (New York University)

-

15:00

Coffee Break

Nevis Science Center

Columbia University, Nevis Laboratories

-

AD for Instrumentation

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Isobel Ojalvo (Princeton University)

-

5

-

6

-

7

CMS CICADA Trigger

Speaker: Kiley Kennedy (Princeton University (US))

-

8

Towards a Self-Driving Trigger: Adaptive Response to Anomalies in Real Time

Recent efforts in high energy physics have explored a variety of machine learning approaches for detecting anomalies in collision data. While most are designed for offline analysis, applications at the trigger level are emerging. In this work, we introduce a novel framework for autonomous triggering that not only detects anomalous patterns directly at the trigger level but also determines how to respond to them in real time. We develop and benchmark an autonomous, or self-driving, trigger framework that integrates anomaly detection with real-time control strategies, dynamically adjusting trigger thresholds and allocations in response to changing conditions. Using feedback-based control and resource-aware optimization that accounts for trigger bandwidth and compute constraints, our system maintains stable trigger rates while enhancing sensitivity to rare or unexpected signals. This work represents a step toward scalable, adaptive trigger systems and highlights new directions that harness the full potential of anomaly detection for real-time data acquisition.

Speaker: Cecilia Tosciri (University of Chicago)
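As a rough illustration of the feedback-based control mentioned in the abstract above, the following minimal Python sketch adjusts a single anomaly-score threshold so that the accepted fraction of events stays near a target. It is not the authors' framework: the function names, the proportional update rule, the gain value, and the clipping of the error are all assumptions made for illustration.

```python
import numpy as np

def update_threshold(threshold, observed_rate, target_rate, gain=0.1):
    """One proportional-feedback step (hypothetical rule, not from the talk).

    The threshold is raised when the accepted rate is above target and
    lowered when it is below. The relative error is clipped to [-1, 1]
    so that a single noisy batch cannot move the threshold too far.
    """
    relative_error = (observed_rate - target_rate) / target_rate
    relative_error = max(-1.0, min(1.0, relative_error))
    return threshold * (1.0 + gain * relative_error)

def run_controller(score_batches, target_rate, threshold=1.0, gain=0.1):
    """Apply the update batch by batch.

    Returns the threshold used for each batch and the fraction of
    events in that batch whose anomaly score exceeded it.
    """
    thresholds, rates = [], []
    for scores in score_batches:
        rate = float(np.mean(np.asarray(scores) > threshold))
        thresholds.append(threshold)
        rates.append(rate)
        threshold = update_threshold(threshold, rate, target_rate, gain)
    return np.array(thresholds), np.array(rates)

if __name__ == "__main__":
    # Toy anomaly scores: exponentially distributed, 20000 events per batch.
    rng = np.random.default_rng(0)
    batches = [rng.exponential(1.0, 20000) for _ in range(150)]
    thresholds, rates = run_controller(batches, target_rate=0.01)
    print("final threshold:", thresholds[-1])
    print("mean accepted fraction, last 30 batches:", rates[-30:].mean())
```

A small gain is used here because the accepted rate can depend steeply on the threshold; in this toy setup a much larger gain makes the loop oscillate rather than settle.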

9

Anomaly detection at an X-ray FEL

Modern light sources produce too many signals for a small operations team to monitor in real time. As a result, recovering from faults can require long downtimes, or even worse, performance issues may persist undiscovered. Existing automated methods tend to rely on pre-set limits which either miss subtle problems or produce too many false positives. AI methods can solve both problems, but deep learning techniques typically require extensive labeled training sets, which may not exist for anomaly detection tasks. Here we will show work on unsupervised AI methods developed to find subsystem faults at the Linac Coherent Light Source (LCLS). Whereas most unsupervised AI methods are based on distance or density metrics, we will describe a coincidence-based method that identifies faults through simultaneous changes in subsystem and beam behavior. We have applied the method to radio-frequency (RF) station faults, the most common cause of performance drops at LCLS, and find that the proposed method can be fully automated while identifying 3 times more events with 6 times fewer false positives than the existing alarm system. Clustering the identified anomalies can also provide additional granularity into the underlying root cause of failure.

Speaker: Daniel Ratner (SLAC)
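The coincidence idea in the abstract above, flagging a fault only when a subsystem signal and the beam signal change at the same time, can be sketched in a few lines of Python. This is a toy under stated assumptions and not the LCLS method: the robust z-score, the `z_cut` value, and the function names are hypothetical choices made for illustration.

```python
import numpy as np

def robust_z(x):
    """Z-score based on the median and the median absolute deviation (MAD).

    Using the MAD keeps rare faults from inflating the scale estimate.
    The factor 1.4826 makes the MAD comparable to a Gaussian sigma.
    """
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    mad = np.median(np.abs(x - med))
    return (x - med) / (1.4826 * mad + 1e-12)

def coincident_anomalies(subsystem, beam, z_cut=4.0):
    """Boolean mask of time steps where both signals deviate at once."""
    sub_flag = np.abs(robust_z(subsystem)) > z_cut
    beam_flag = np.abs(robust_z(beam)) > z_cut
    return sub_flag & beam_flag

if __name__ == "__main__":
    t = np.arange(100)
    subsystem = 0.1 * np.sin(t)
    beam = 0.1 * np.cos(t)
    subsystem[50] += 5.0   # subsystem glitch with a beam response
    beam[50] -= 5.0
    subsystem[80] += 5.0   # subsystem glitch with no beam response
    beam[20] -= 5.0        # beam drop with no subsystem change
    print(np.flatnonzero(coincident_anomalies(subsystem, beam)))
```

Requiring the coincidence is what suppresses false positives in this sketch: the isolated excursions at time steps 20 and 80 exceed the per-signal cut but are not flagged.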

-

Reception

Hamilton House

Columbia University, Nevis Laboratories

-

-

-

08:30

Breakfast

Nevis Science Center

Columbia University, Nevis Laboratories

-

New Directions in AD

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: David Shih

-

10

-

11

AD Interpretation & Phenomenology

Speaker: Anna Hallin

-

12

Incorporating Physical Priors into Weakly-Supervised Anomaly Detection

Weakly-supervised anomaly detection methods offer a powerful approach for discovering new physics by comparing data to a background-only reference. However, the sensitivity of existing strategies can be significantly limited by rare signals or high-dimensional, noisy feature spaces. We present Prior-Assisted Weak Supervision (PAWS), a novel machine-learning technique that significantly boosts search sensitivity by incorporating physical priors from a class of signal models into the weakly-supervised framework. PAWS pre-trains parameterized neural network classifiers on simulated signal events and then fine-tunes these models to distinguish data from a background reference, with the signal parameters themselves being learnable during this second stage. This approach allows PAWS to achieve the sensitivity of a fully-supervised search for signals within the pre-specified class, without needing to know the exact signal parameters in advance. Applied to the LHC Olympics anomaly detection benchmark, PAWS extends the discovery reach by a factor of 10 in cross section over previous methods. Crucially, PAWS demonstrates remarkable robustness to irrelevant noise features, unlike traditional methods whose performance degrades substantially. This work highlights the power of integrating domain knowledge into machine learning models for high energy physics, offering a promising path towards more sensitive and robust anomaly detection in jet-based searches and beyond.

Speaker: Chi Lung Cheng (University of Wisconsin-Madison)

13

Surrogate Simulation-based Inference (S2BI)

Modern simulation-based inference (SBI) is a suite of tools to scaffold physics-based simulations with neural networks and other tools to perform inference using as much information as possible. We extend this toolkit to the case where some or all of the simulations are actually surrogate models learned directly from the data. This Surrogate Simulation-based Inference (S2BI) concept is studied in the context of resonant anomaly detection, where the background simulation is learned from sidebands. The signal simulation is also learned from data by extending the Residual Anomaly Detection with Density Estimation (R-ANODE) in the context of S2BI. A key challenge with S2BI is that the inference task can be distracted by inaccuracies of the simulation. This is especially acute in the flexible signal case, as the signal probability density can readily absorb artifacts from the learned background model. One solution is to encode prior information into the signal simulation, limiting flexibility enough to avoid absorbing background deficiencies, but still able to find anomalies. We study this idea by extending the Prior-Assisted Weak Supervision (PAWS) method in the context of S2BI. Overall, we find that both methods achieve excellent sensitivity and now allow for direct statistical analysis from their output. S2BI PAWS achieves discovery sensitivity down to an initial signal-to-noise ratio of 0.1. This performance, combined with its statistically robust and interpretable outputs, establishes a new state-of-the-art for anomaly detection sensitivity.

Speaker: Runze Li (Yale University)

14

From High Dimensions to Statistical Discovery: A Contrastive Learning Approach to Anomaly Detection

Anomaly detection in high energy physics (HEP), and in many other scientific fields, is challenged by rare signals found in high-dimensional data. Two main strategies have emerged to mitigate the curse of dimensionality: scaling detection methods to handle high dimensions, or reducing the dimensionality before statistical analysis.

This talk focuses on the latter, introducing a supervised contrastive learning framework that builds low-dimensional embeddings informed by known physics labels (e.g., backgrounds). In the absence of a priori knowledge of the shape and location of a putative new signal in the constructed space, we perform a signal-agnostic Neyman-Pearson two-sample test using the NPLM algorithm to identify statistically significant anomalous regions.

We validate our method on high-dimensional datasets including jet- and event-level LHC data, as well as LIGO and histology datasets, achieving high sensitivity to rare anomalies across all domains. Our results emphasize the value of physics-informed data compression and the critical role of domain knowledge in enhancing AI-driven discovery. Building on this work, we outline a strategy towards integration of domain adaptation and systematic uncertainties, paving the way for broader applicability in real-world discovery scenarios.

Speaker: Gaia Grosso (IAIFI/MIT/Harvard)

-

10:30

Coffee Break

Nevis Science Center

Columbia University, Nevis Laboratories

-

Early Career Session: Anomaly Detection in HEP and Beyond: Block 1

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Kiley Kennedy

15

Latest improvements to CATHODE

The search for physics beyond the Standard Model remains one of the primary focuses of high-energy physics. Traditional LHC analyses, though comprehensive, have yet to yield signs of new physics. Anomaly detection has emerged as a powerful tool to widen the discovery horizon, offering a model-agnostic way to enhance the sensitivity of generic searches that do not target any specific signal model. One of the leading methods, CATHODE (Classifying Anomalies THrough Outer Density Estimation), is a two-step anomaly detection framework that constructs an in-situ background estimate using a generative model, followed by a classifier to isolate potential signal events.

We present the latest developments to the CATHODE method, aimed at increasing its robustness and broadening its applicability. These improvements expand its reach to new topologies with new input variables covering all particles in the event.

Speaker: Chitrakshee Yede (Universität Hamburg)

16

Self-supervised mapping of space-charge distortions in Liquid Argon TPCs

We introduce an end-to-end, self-supervised neural workflow that learns the three-dimensional electric-field distortion in a Liquid Argon TPC directly from through-going cosmic muon tracks. The network uses geometric straightness and boundary conditions to generate its own target undistorted paths and iteratively refine them. In this talk, I will present the architecture of the model, detail the self-supervised training process and analyze its performance on SBND-scale simulations.

Speaker: Jack Cleeve

17

Unaccounted-for look-elsewhere effect in k-fold cross adaptive anomaly searches

Recently, a technique based on k-fold cross validation has become popular in anomaly detection in HEP as a way to search for a wide class of anomalies in the data without paying an associated penalty in sensitivity depth. In this work, we point out that the breadth-depth tradeoff is an unavoidable aspect of anomaly detection, and cannot be overcome using the aforementioned k-fold cross adaptive search technique. Furthermore, we show that the technique leads to an unaccounted-for look-elsewhere effect, i.e., the underestimation of p-values or the overestimation of significances of observed anomalies.

Speaker: Prasanth Shyamsundar (Fermi National Accelerator Laboratory)
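For readers unfamiliar with the look-elsewhere effect named in the abstract above, the following Python sketch shows the textbook version of it for independent tests: when several places are searched, the chance that at least one of them shows a small local p-value under the null hypothesis is larger than that local p-value. This is only the generic trials-factor calculation. It does not reproduce the k-fold analysis of the talk, and the function names and the independence assumption are illustrative.

```python
import numpy as np

def global_p_value(local_p, n_trials):
    """Probability that at least one of n_trials independent null tests
    yields a p-value at or below local_p."""
    return 1.0 - (1.0 - local_p) ** n_trials

def simulated_false_alarm_rate(alpha, n_trials, n_experiments=50000, seed=0):
    """Monte Carlo check: fraction of null pseudo-experiments in which the
    smallest of n_trials uniform p-values falls below alpha."""
    rng = np.random.default_rng(seed)
    p_values = rng.uniform(size=(n_experiments, n_trials))
    return float(np.mean(p_values.min(axis=1) < alpha))

if __name__ == "__main__":
    alpha, n_trials = 0.01, 10
    print("local p-value:      ", alpha)
    print("global p-value:     ", global_p_value(alpha, n_trials))
    print("simulated false rate:", simulated_false_alarm_rate(alpha, n_trials))
```

Quoting the smallest local p-value without this correction understates the p-value, which is the sense in which an unaccounted-for look-elsewhere effect overstates significance.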

18

Recent Advances in Resonant Anomaly Detection

Based on R-ANODE (https://arxiv.org/abs/2312.11629) and SIGMA (https://arxiv.org/abs/2410.20537).

Speaker: Ranit Das (Rutgers University)

19

RL-Driven Anomaly Detection for Adaptive Trigger Menus at the LHC

The Large Hadron Collider (LHC) produces collision data at the rate of 40 MHz, making it infeasible to store or analyze all events. Conventional trigger strategies at the experiments operating at the LHC typically rely on predefined physics signatures to decide which events to retain. However, possible manifestations of new physics may not conform to these established patterns and thus risk being discarded. Anomaly detection in the trigger menu is essential for identifying rare and potentially new physics events that may evade traditional selection criteria.

We propose a reinforcement learning (RL)-based approach that autonomously learns to detect and respond to anomalous patterns in real time at the trigger level. Our RL agent is trained to identify deviations from expected event distributions and adapt trigger thresholds dynamically based on detector conditions and feedback. Unlike static rule-based systems, the RL agent continuously optimizes its policy to balance trigger bandwidth, compute constraints, and physics sensitivity. This approach enhances the LHC’s ability to capture previously unseen physics processes while maintaining operational stability. Our approach represents a step toward intelligent, scalable trigger systems that maximize discovery potential without relying on rigid and handcrafted selection rules.

Speaker: Zixin Ding (University of Chicago)

-

Nevis Labs Summer BBQ

Picnic Area

Columbia University, Nevis Laboratories

-

Panel: Lessons Learned in AD

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Julia Gonski (SLAC National Accelerator Laboratory)

15:30

Coffee Break

Nevis Science Center

Columbia University, Nevis Laboratories

-

Hands-on Tutorial: 1. AD Exercises with CMS Open Data

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Andrew Loeliger (Princeton University (US))

18:00

Social Outing (TBD)

Nevis Science Center

Columbia University, Nevis Laboratories

-

08:30

Breakfast

Nevis Science Center

Columbia University, Nevis Laboratories

-

Hands-on Tutorial: 2a. MicroBooNE Open Samples

Nevis Science Center

Columbia University, Nevis Laboratories

Conveners: Jack Cleeve, Seokju Chung (Columbia University)

Hands-on Tutorial: 2b. AD Implementation on FPGA: A Walkthrough Exercise

Nevis Science Center

Columbia University, Nevis Laboratories

Conveners: Jack Cleeve, Seokju Chung (Columbia University)

10:00

Coffee Break

Nevis Science Center

Columbia University, Nevis Laboratories

-

Early Career Session: Anomaly Detection in HEP and Beyond: Block 2

Nevis Science Center

Columbia University, Nevis Laboratories

Convener: Andrew Loeliger (Princeton University (US))

20

Isolating Unisolated Upsilons with Anomaly Detection in CMS Open Data

We present the first study of anti-isolated Upsilon decays to two muons (Υ → μ+μ−) in proton-proton collisions at the Large Hadron Collider. Using a machine learning (ML)-based anomaly detection strategy (CATHODE), we “rediscover” the Υ in 13 TeV CMS Open Data from 2016, despite overwhelming anti-isolated backgrounds. CATHODE can elevate the signal significance to 6.4σ (starting from 1.6σ using the dimuon mass spectrum alone), far outperforming classical cut-based methods on individual observables. Our work demonstrates that it is possible and practical to find real signals in experimental collider data using ML-based anomaly detection. Additionally, we distill a readily-accessible benchmark dataset from the CMS Open Data to facilitate future anomaly detection development. THIS TALK IS THE FIRST OF TWO PARTS.

Speaker: Radha Mastandrea (UC Berkeley)

21

Likelihood-based Reweighting for Improved Sensitivity in Anomaly Detection

Many Machine Learning (ML)-based Anomaly Detection (AD) methods are based on an "extended bump hunt", where ML cuts are used to enhance the significance of signal in a bump. We show how, by taking advantage of the fact that ML methods (e.g. CATHODE with CWoLa) often involve learning the likelihood ratio of the signal and background hypotheses, events can be weighted rather than cut for improved (and in some cases, optimal) sensitivity. Moreover, this removes the need to manually select cuts or working points. We demonstrate this improvement in using CATHODE to find the Upsilon in CMS Open Data, where likelihood reweighting improves the ordinary sensitivity from 5.7σ to 6.4σ. THIS TALK IS THE SECOND OF TWO PARTS.

Speaker: Rikab Gambhir (Massachusetts Institute of Technology)
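The claim in the abstract above, that weighting events can do at least as well as cutting on them, can be illustrated with a small binned toy in Python. The sketch assumes a Gaussian approximation in which the significance of a weighted count is sum(w s) / sqrt(sum(w^2 b)), with background fluctuations only. Under that assumption, weights proportional to s/b are optimal by the Cauchy-Schwarz inequality, and a cut is the special case of weights equal to 0 or 1. The yields, the function names, and the binning by classifier score are hypothetical and are not taken from the talk.

```python
import numpy as np

def weighted_significance(s, b, w):
    """Approximate significance of a weighted count.

    s, b: expected signal and background yields per bin.
    w:    per-bin weights (a cut corresponds to weights of 0 or 1).
    """
    s, b, w = (np.asarray(a, dtype=float) for a in (s, b, w))
    return float(np.sum(w * s) / np.sqrt(np.sum(w**2 * b)))

def best_cut_significance(s, b):
    """Best significance over all cuts that keep the highest-s/b bins."""
    s = np.asarray(s, dtype=float)
    b = np.asarray(b, dtype=float)
    order = np.argsort(-(s / b))          # most signal-like bins first
    cum_s = np.cumsum(s[order])
    cum_b = np.cumsum(b[order])
    return float(np.max(cum_s / np.sqrt(cum_b)))

def likelihood_ratio_weights(s, b):
    """Weights proportional to the per-bin signal-to-background ratio."""
    return np.asarray(s, dtype=float) / np.asarray(b, dtype=float)

if __name__ == "__main__":
    # Hypothetical yields in three bins of a classifier score.
    s = [5.0, 10.0, 20.0]
    b = [1000.0, 200.0, 50.0]
    w = likelihood_ratio_weights(s, b)
    print("best cut :", best_cut_significance(s, b))
    print("weighted :", weighted_significance(s, b, w))
```

With these weights the significance squared reduces to sum(s^2 / b), so every bin contributes and no working point has to be chosen by hand.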

22

Detector-Aware Anomaly Detection in Future Colliders with Synthetic Representations

Physically-grounded anomaly detection requires reconstruction pipelines that preserve, rather than obscure, detector information. I introduce a two-stage strategy that inserts a synthetic, detector-aware representation (S) between the raw calorimeter readout (D) and low-dimensional truth (T). I demonstrate the concept in full simulation of a segmented dual-readout crystal ECAL for future colliders, where S is built from images of scintillation and Cerenkov photon tracks inside the detector. These features encode the physics of the conventional dual-readout correction but are inaccessible in real data. A first U-Net implementation on D -> S -> T reproduces the principles of dual-readout correction, giving the network an interpretable physical anchor. Because outliers are expressed in terms of concrete detector processes, this approach makes possible more reliable, explainable anomaly detection than purely latent-space methods. I will present the first qualitative results and outline next steps, setting the stage for detector-aware AD at future colliders.

Speaker: Wonyong Chung (Princeton University)

23

Real-time anomaly detection on Liquid Argon Time Projection Chamber wire data

Real-time anomaly triggers based on autoencoders have been successfully demonstrated at the CMS experiment, including CICADA (operating on raw calorimeter data from ECAL and HCAL) and AXOL1TL (operating on trigger-level reconstructed objects such as electrons, taus, and jets). Motivated by the CICADA project, we explore the feasibility of applying similar techniques to raw wire data, which carry ionization energy deposition information, from Liquid Argon Time Projection Chambers (LArTPCs). In this talk, I will present the physics performance of such networks and share initial benchmarks toward hardware implementation and acceleration in FPGAs.

Speaker: Seokju Chung (Columbia University)

24

Interpretable time series foundation models for anomaly prediction in sPHENIX

This work introduces a new interpretable time series foundation model, and its application to anomaly prediction for sPHENIX - a recently commissioned detector at the RHIC facility at BNL. Our goal is to monitor the detector’s operational status, identify early warning signs, and predict potential anomalies. Despite diverse time series modeling techniques, existing models are black boxes and fail to provide insights and explanations about their representations. We present VQShape, a pre-trained, generalizable, and interpretable model for time-series representation learning and classification. By introducing a novel representation for time-series data, we forge a connection between the latent space of VQShape and shape-level features. Using vector quantization, VQShape encodes time series from diverse domains into a shared set of low-dimensional codes, each corresponding to an abstract shape in the time domain. This enables the model not only to predict anomalies but also to highlight important precursor patterns, offering explanations for its predictions.

Speaker: Tengfei Ma (Stony Brook University)

25

Unveiling New Physics Models through meson decays and their impact on neutrino experiments

As neutrino experiments enter the precision era, it is desirable to identify deviations of data from theory as well as to provide possible models as explanations. Particularly useful is the description in terms of non-standard interactions (NSI), which can be related to neutral (NC-NSI) or charged (CC-NSI) currents. Previously, we have developed the code eft-neutrino that connects NSI with generic UV models at tree-level matching. In this work, we integrate our code with other tools, extending the matching between the UV and IR theories to one-loop level. As a working example, we consider pion and kaon decays, the main production mechanisms for accelerator neutrino experiments. We provide up-to-date allowed regions on a set of Wilson coefficients related to pion and kaon decay. We also illustrate how our chain of codes can be used in particular UV models, showing that a promisingly large value for CC-NSI can be misleading when considering a specific UV model.

Speaker: Adriano Cherchiglia (Universidade Estadual de Campinas)

-

Closeout

Nevis Science Center

Columbia University, Nevis Laboratories

Conveners: Georgia Karagiorgi (Columbia University), Isobel Ojalvo (Princeton University), Julia Gonski (SLAC National Accelerator Laboratory)
